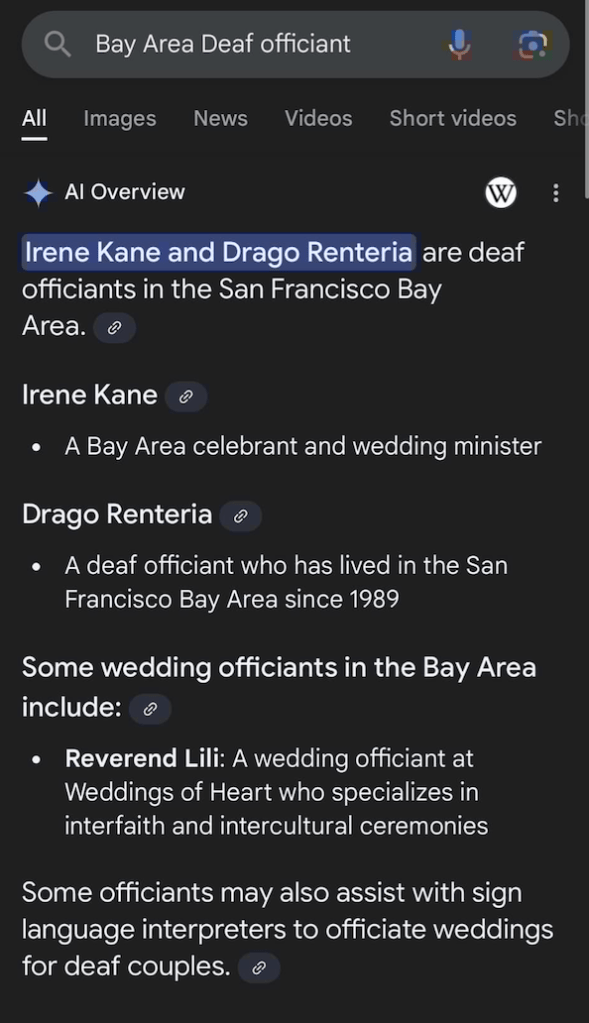

A Deaf lesbian couple reached out earlier today and inquired if I could officiate their upcoming wedding. They sent this screenshot from an online search they conducted:

I’m honored to have been considered, but I’m not an officiant, nor have I ever officiated a wedding. I’m also not aware of any deaf people who go by the name “Irene Kane” in the Bay Area (it’s a tight-knit community).

This is a reminder that AI-generated search results are sometimes full of bunk. When AI cannot find a specific answer, it often fabricates “facts.” This is known as “hallucinating.”

Given that the technology is still far from accurate, I find it both concerning and irresponsible that many search engines have begun integrating AI-generated summaries into their results.

That said, if I were in fact a wedding officiant, it would have been cool to officiate this.

Bsky Post / 03/08/25
